Service Node Setup

This guide explains step-by-step how to set up a Service Node. Operating a Service Node requires 5000 BLOCK collateral. This collateral stays in your wallet and under your control, but it must not be moved or spent, or the Service Node will no longer be valid and active. You may still use this collateral for staking.

Operating as a Service Node requires two Blocknet wallets:

- Collateral Wallet - This wallet will contain the 5000 BLOCK collateral and does NOT need to remain online and unlocked unless the 5000 BLOCK is being staked or you are voting on a proposal.

- Service Node Wallet - This wallet will act as the Service Node and must remain online and open at all times.

Hardware Requirements For Service Node Wallet

Hardware requirements for a Service Node vary depending on which SPV wallets and services your node will support.

Minimum System

The minimum hardware requirements for any Service Node are probably:

- 4 CPU cores (or 4 vCPUs if the Service Node runs on a VPS)

- 8 GB RAM

- 200 GB SSD storage (slower HDD drives are unlikely to work).

- 25+ Mbit/s Internet download speed (100+ Mbit/s is much better for faster syncing).

Such a system could support a few small SPV wallets and a Blocknet staking wallet.

Medium & Large Systems

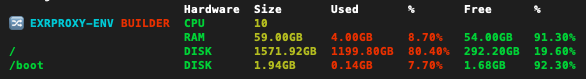

If you want to host the Hydra and/or XQuery Indexer services (and maybe a few SPV wallets as well), you'll likely need at least 10 vCPUs, 60 GB of RAM and 1.3+ TB of SSD storage space. Hosting Hydra or XQuery services requires hosting a node of a supported blockchain, typically an EVM (Ethereum Virtual Machine) chain like Ethereum/ETH, Avalanche/AVAX, Sys NEVM, Binance Smart Chain/BSC or Fantom/FTM, or a non-EVM chain like Solana/SOL, Polkadot/DOT or Cardano/ADA. So far, testing of XQuery suggests it should be possible to host XQuery services supporting EVM chains like AVAX, Sys NEVM and BSC on hardware with these same specs, though it may be necessary to add extra storage to support the rapidly growing AVAX blockchain.

Minimum hardware requirements for a medium to large Service Node capable of supporting Hydra and/or XQuery Indexer services would be something like this:

- 10 CPU cores (or 10 vCPUs if the Service Node runs on a VPS)

- 60 GB RAM

- 1.3+ TB SSD Storage

- 25+ Mbit/s Internet download speed (100+ Mbit/s is much better for faster syncing).
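To check a machine against the two tiers above, a small shell function can compare its core count, RAM and free disk space with the listed thresholds. This is a hypothetical helper (classify_node_hw is not part of any Blocknet tool). It does not check bandwidth or whether the disk is an SSD, so treat it as a rough sketch only.

```shell
# Hypothetical helper: compare specs with the hardware tiers in this guide.
#   minimum      = 4 cores, 8 GB RAM, 200 GB SSD
#   medium-large = 10 cores, 60 GB RAM, 1300 GB SSD (Hydra/XQuery)
# Bandwidth and disk type (SSD vs HDD) are NOT checked.
classify_node_hw() {
  local cores=$1 ram_gb=$2 disk_gb=$3
  if [ "$cores" -ge 10 ] && [ "$ram_gb" -ge 60 ] && [ "$disk_gb" -ge 1300 ]; then
    echo "medium-large"
  elif [ "$cores" -ge 4 ] && [ "$ram_gb" -ge 8 ] && [ "$disk_gb" -ge 200 ]; then
    echo "minimum"
  else
    echo "below-minimum"
  fi
}

# Example on Ubuntu (free -g rounds down, so an "8 GB" VPS may report 7;
# the df call reports free space on / in GB):
#   classify_node_hw "$(nproc)" "$(free -g | awk '/^Mem:/ {print $2}')" \
#     "$(df -BG --output=avail / | tail -n 1 | tr -dc '0-9')"
```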

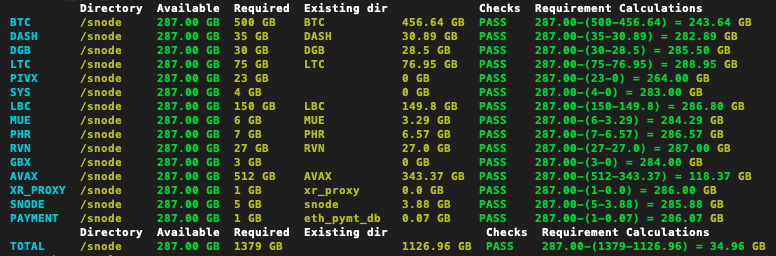

To give an estimate of how much storage space is required for various SPV wallets, here is a snapshot of approximate disk space utilizations taken May 18, 2022:

Snapshot of SPV wallet disk space utilizations taken April 8, 2022

| SPV wallet | Estimated Size (GB) |
|---|---|
| bitcoin | 500 |
| dogecoin | 70 |
| litecoin | 85 |
| dash | 35 |
| digibyte | 33 |
| raven | 32 |
| pivx | 22 |
| verge | 11 |
| crown | 9 |
| phore | 8 |
| unobtanium | 4 |
| lux | 4 |
| blocknet | 5 |
| terracoincore | 4 |
| alqocrypto | 4 |
| monetaryunit | 5 |
| syscoin | 6 |
| merge | 4 |
| zenzo | 4 |
| goldcoin | 3 |
| egulden | 3 |
| stakecubecoin | 2 |
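To estimate how much disk space a particular mix of SPV wallets needs, you can add up their sizes from the table. The sketch below hard-codes the snapshot figures above (spv_storage_gb is a hypothetical helper). Because these blockchains keep growing, treat the result as a lower bound and leave generous headroom.

```shell
# Approximate SPV wallet sizes in GB, copied from the snapshot table above.
SPV_SIZES_GB='bitcoin 500
dogecoin 70
litecoin 85
dash 35
digibyte 33
raven 32
pivx 22
verge 11
crown 9
phore 8
unobtanium 4
lux 4
blocknet 5
terracoincore 4
alqocrypto 4
monetaryunit 5
syscoin 6
merge 4
zenzo 4
goldcoin 3
egulden 3
stakecubecoin 2'

# Hypothetical helper: print the summed size (GB) of the named wallets.
# Fails if a name is not in the table.
spv_storage_gb() {
  local total=0 name size
  for name in "$@"; do
    size=$(printf '%s\n' "$SPV_SIZES_GB" | awk -v n="$name" '$1 == n { print $2 }')
    if [ -z "$size" ]; then
      echo "unknown wallet: $name" >&2
      return 1
    fi
    total=$((total + size))
  done
  echo "$total"
}

# Example: spv_storage_gb bitcoin litecoin dogecoin   (prints 655)
```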

VPS Provider Options for Small, Medium & Large Systems

If you want to compare prices between VPS service providers, you can compare the offerings of companies like Digital Ocean, Contabo, Vultr, Amazon AWS, and Google Cloud Computing. A Google search for "VPS Hosting Service Provider" will show many more options. As of this writing, Contabo seems to offer the most hardware for the money, but it's always good to do a little comparison shopping.

Extra Large System for supporting ETH archival node

To support Hydra and/or XQuery Indexer services of the Ethereum/ETH blockchain, a Service Node must host an ETH archival node. In terms of CPU and RAM requirements, running an ETH archival node requires:

- 8 CPU Cores (or 8 vCPUs if the ETH archival Node runs on a VPS)

- 16 GB RAM

The storage space requirements for an ETH archival node are a bit more demanding. The Go-Ethereum (GETH) archival node, which is the core wallet needed to run an ETH archival node, occupies about 8TB of space as of this writing (July 17, 2021). Furthermore, it is growing by 3TB per year. (Its current size can be found here.) Therefore, a Service Node supporting an ETH archival node should probably have at least 10TB for ETH alone, plus maybe another 1.5-2TB for running other SPV wallets. It should also have the ability to expand its storage space by 3TB per year.
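The storage reasoning above is linear arithmetic: years of headroom = (capacity - space used) / yearly growth. With the July 2021 figures (about 8 TB used, growing about 3 TB per year), a 10 TB volume would fill in roughly 0.67 years. A minimal sketch, assuming growth stays linear (years_until_full is a hypothetical helper):

```shell
# Hypothetical helper: estimate years until a volume fills up.
# Arguments: capacity (TB), space already used (TB), growth (TB per year).
# Assumes linear growth; real chain growth can speed up or slow down.
years_until_full() {
  LC_ALL=C awk -v cap="$1" -v used="$2" -v rate="$3" 'BEGIN {
    if (rate <= 0) { print "growth rate must be positive" > "/dev/stderr"; exit 1 }
    left = cap - used
    if (left < 0) left = 0
    printf "%.2f\n", left / rate
  }'
}

# Example: years_until_full 10 8 3   (10 TB volume, 8 TB GETH node, +3 TB/year)
```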

Update Sept. 27, 2021: Some Blocknet community members are researching the possibility of using the Erigon ETH archival node instead of the Go ETH (GETH) archival node. This is promising research as the Erigon ETH archival node occupies only about a quarter of the space of the GETH archival node. In other words, only about 2TB instead of 8TB as of this writing. Erigon ETH archival node also syncs in about one quarter the time it takes GETH to sync. As of this writing, it appears Erigon ETH may well be able to support Hydra services of ETH in its current stage of development, but until Erigon has standard ETH filter methods, it won't support XQuery services of ETH. Please join discussions in the #hydra channel of Blocknet Discord to keep up with the latest developments on Erigon ETH.

It's also important to note that the storage for the ETH full archival node must be very fast. In other words, it must use SSDs, not HDDs. There are 3 types of SSDs: SATA, SAS and NVMe/PCIe. Of the 3, SATA are the slowest, SAS are a little faster, and NVMe are by far the fastest. NVMe are definitely the preferred variety of SSD drive when it comes to hosting an ETH archival node. In fact, it looks doubtful that SATA or SAS SSD drives will be fast enough to allow the ETH node to sync.

It is also recommended that the SSDs used for an ETH archival node be set up in a redundant RAID configuration (e.g. RAID-1 or RAID-10 mirroring, or ZFS RAID-Z2). Without redundancy, an SSD failure will almost certainly mean you'll have to resync the entire ETH full archival node, which takes over a month for a GETH node (but probably only a quarter of that time for an Erigon ETH node). Your ETH archival node will be offline for the duration of the resync.

As of this writing, it's not clear that any of the VPS Provider Options mentioned above are capable of providing a VPS which meets the HW requirements for an ETH archival node, or if they are capable, the cost can be a bit extreme and it's not clear they can expand storage space as needed to support the growing ETH full archival node. There may well be some smaller VPS providers who are capable of both meeting current HW requirements and allowing for storage space expansion in the future. There are also efforts underway to coordinate "package discounts" from such VPS provider(s) for a person or group of people to rent a number of ETH archival-capable VPS's at a discounted rate. Please join discussions on this topic in the #hydra channel of Blocknet Discord.

Another option for meeting the hardware requirements of an ETH archival node is to purchase your own hardware and run it at home or in a colocation hosting data center. (Colocation hosting services can be found for about USD $50 per month per server, as of Feb. 2022.) If purchasing your own SSD drives, be aware that ETH core will be writing to your SSDs continuously, so you'll want SSDs with a high endurance rating. For example, a company called Sabrent offers an 8TB NVMe SSD. On the surface, it may look like a good choice for building a system with lots of fast storage space. Unfortunately, this particular drive only has an endurance rating of 1,800 TBW (terabytes written), which, according to this review, makes it not very suitable for an application that writes to it frequently. (Note: Samsung NVMe drives and/or any NVMe drives rated for industrial use are probably quite durable and suitable for supporting an archival ETH node.) More discussions about hardware details can be found in the #hydra channel of Blocknet Discord.
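The endurance concern can be made concrete: a drive's expected life in years is roughly its TBW rating divided by (terabytes written per day × 365). The daily write volume is an assumption you must supply for your own node. For instance, at a hypothetical 1 TB written per day, a 1,800 TBW drive would last just under 5 years. ssd_endurance_years is a hypothetical helper.

```shell
# Hypothetical helper: rough SSD lifetime in years.
#   years = TBW rating / (TB written per day * 365)
# The daily write figure is YOUR assumption; measure your own node's writes
# (e.g. from the drive's SMART data) rather than guessing.
ssd_endurance_years() {
  LC_ALL=C awk -v tbw="$1" -v daily="$2" 'BEGIN {
    if (daily <= 0) { print "daily writes must be positive" > "/dev/stderr"; exit 1 }
    printf "%.2f\n", tbw / (daily * 365)
  }'
}

# Example: ssd_endurance_years 1800 1   (1,800 TBW drive, hypothetical 1 TB/day)
```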

Running a Collateral or Staking Wallet and a Service Node Wallet on the same computer

If you plan to run a Collateral or Staking Wallet on the same computer as your Service Node Wallet, complete the steps in this section before setting up your Service Node.

Service Node operators will often want to run a Collateral Wallet or a Staking Wallet on the same computer as their Service Node Wallet. In almost all cases, the Collateral Wallet will also be staking, so we can say it is also a Staking Wallet. A Staking Wallet, however, is not always used as a Collateral Wallet.

To run a Staking Wallet and a Service Node Wallet on the same computer, follow these steps:

- Set up your Staking Wallet. Here is an example guide for setting up a Staking Wallet on a VPS running Ubuntu Linux. The Hardware Requirements for a Service Node Wallet should be followed when selecting a VPS as per that guide.

- Stop your Staking Wallet. If your Staking Wallet has been set up according to the VPS Staking guide, and the alias for blocknet-cli has also been set up according to that guide, you can stop your Staking Wallet by issuing the following command:

blocknet-cli stop

- Change the Peer-to-Peer (P2P) port and the RPC port of your Staking Wallet so they don't conflict with the P2P port and the RPC port of your Service Node Wallet. To do this on a Linux system:

- Change directory to your Staking Wallet data directory. If your Staking Wallet has been set up according to the VPS Staking guide, your Staking Wallet data directory will be ~/.blocknet. For example:

cd ~/.blocknet

- Use a simple text editor like vi or nano to edit the wallet configuration file, blocknet.conf. For example:

nano blocknet.conf

Note: editing blocknet.conf will create blocknet.conf if it doesn't already exist.

- Add two lines like the following to your blocknet.conf:

port=41413
rpcport=41415

Note: The port and rpcport values you set in your blocknet.conf don't have to be 41413 and 41415, as in the example above; they just have to be port numbers that are not used as either port or rpcport in the default Blocknet wallet configuration. Therefore you must not use 41412 or 41414, which are the default values for port and rpcport, respectively. You should also not use the default port or rpcport values for Blocknet testnet, which are 41474 and 41419, respectively. Incrementing the default Blocknet port and rpcport values by 1, as in the example above, is a fairly safe strategy for avoiding port conflicts, not only with the Blocknet testnet but also with other SPV wallets running on your Service Node.

- Save your edits to blocknet.conf, exit the editor and restart your Staking Wallet. If your Staking Wallet has been set up according to the VPS Staking guide, and the aliases for blocknet-cli, blocknet-daemon and blocknet-unlock have also been set up according to that guide, you can restart your Staking Wallet and start it staking again by issuing the following command:

blocknet-daemon

(You'll need to wait a few minutes after starting the Blocknet daemon for your wallet to sync the headers. Then you'll be allowed to unlock your wallet for staking in the next step.)

- Unlock your staking wallet for staking only (enter your wallet password when prompted):

blocknet-unlock

- Confirm your wallet is staking by issuing the command:

blocknet-cli getstakingstatus

When this command returns "status": "Staking is active", you know your wallet is staking properly. You may also want to confirm your staking wallet balance is correct with:

blocknet-cli getbalance
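If you prefer to script the port change, the sketch below checks that a chosen port is not one of the default values listed above, then sets port and rpcport in blocknet.conf, replacing existing lines rather than duplicating them. The function names are hypothetical, and the sketch assumes the Staking Wallet is stopped while the file is edited.

```shell
# Default ports listed in this guide: mainnet P2P/RPC, testnet P2P/RPC.
RESERVED_PORTS="41412 41414 41474 41419"

# Succeed if $1 is a plain port number (1-65535) that is not a reserved default.
port_is_allowed() {
  local reserved
  case $1 in
    ''|*[!0-9]*|0*) return 1 ;;   # empty, non-numeric, or leading zero
  esac
  [ "$1" -le 65535 ] || return 1
  for reserved in $RESERVED_PORTS; do
    [ "$1" -eq "$reserved" ] && return 1
  done
  return 0
}

# Set KEY=VALUE in FILE: rewrite an existing "KEY=" line or append a new one.
# Creates FILE if it does not exist (as editing blocknet.conf would).
set_conf_value() {
  local file=$1 key=$2 value=$3
  touch "$file"
  if grep -q "^${key}=" "$file"; then
    sed -i.bak "s/^${key}=.*/${key}=${value}/" "$file" && rm -f "$file.bak"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Example (Staking Wallet stopped first):
#   CONF=~/.blocknet/blocknet.conf
#   port_is_allowed 41413 && port_is_allowed 41415 &&
#     set_conf_value "$CONF" port 41413 && set_conf_value "$CONF" rpcport 41415
```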


If you will be setting up your Service Node following the Enterprise XRouter Environment Service Node Setup Guide, there is nothing more you need to do regarding your Staking Wallet and you can proceed directly to the Enterprise XRouter Environment Service Node Setup Guide. However, if you will be setting up your Service Node following the Manual Service Node Setup Guide, you'll need to move your Staking Wallet data directory to allow the Service Node Wallet data directory to occupy the default data directory location. To move your Staking Wallet data directory, follow these steps:

- Stop your Staking Wallet. If your Staking Wallet has been set up according to the VPS Staking guide, and the alias for blocknet-cli has also been set up according to that guide, you can stop your Staking Wallet with:

blocknet-cli stop

- Wait until your Staking Wallet stops completely. You can monitor the Staking Wallet Linux process (called blocknetd) by repeatedly issuing the following command:

ps x -o args | grep -v "grep" | grep "blocknetd"

Before the Staking Wallet Linux process has stopped completely, that command will return something like this:

/home/[user]/blocknet-4.3.3/bin/blocknetd -daemon

Continue issuing that ps x -o args command until it returns nothing. Then you know the blocknetd process has stopped completely and it's safe to move your data directory.

- Issue the following commands to move (rename) your Staking Wallet data directory:

cd ~
mv .blocknet .blocknet_staking

- Now, assuming your Staking Wallet access aliases have been set up according to the VPS Staking guide, you'll need to change those alias definitions to reflect the fact that your Staking Wallet data directory has changed. To do so, use vi or nano to edit the ~/.bash_aliases file. For example:

nano ~/.bash_aliases

Leave the first line of the file as it is:

export BLOCKNET_VERSION='4.3.3'

with 4.3.3 being replaced by whatever version of Blocknet wallet your Staking Wallet is running. Then change all the alias definitions to be as follows:

export BLOCKNET_VERSION='4.3.3'
# blocknet-daemon = Start Blocknet daemon for staking wallet
alias blocknet-daemon='~/blocknet-${BLOCKNET_VERSION}/bin/blocknetd -daemon -datadir=$HOME/.blocknet_staking/'
# blocknet-cli = Staking wallet Command Line Interface
alias blocknet-cli='~/blocknet-${BLOCKNET_VERSION}/bin/blocknet-cli -datadir=$HOME/.blocknet_staking/'
# blocknet-unlock = Unlock staking wallet for staking only
alias blocknet-unlock='~/blocknet-${BLOCKNET_VERSION}/bin/blocknet-cli -datadir=$HOME/.blocknet_staking/ walletpassphrase "$(read -sp "Enter Password:" undo; echo $undo;undo=)" 9999999999 true'
# blocknet-unlockfull = Unlock staking wallet fully
alias blocknet-unlockfull='~/blocknet-${BLOCKNET_VERSION}/bin/blocknet-cli -datadir=$HOME/.blocknet_staking/ walletpassphrase "$(read -sp "Enter Password:" undo; echo $undo;undo=)" 9999999999 false'

- Save your edits to ~/.bash_aliases, exit the editor, then activate your new alias definitions with:

source ~/.bash_aliases

- Restart your staking wallet with:

blocknet-daemon

- Unlock your staking wallet for staking only (enter your wallet password when prompted):

blocknet-unlock

- Confirm your wallet is staking by issuing the command:

blocknet-cli getstakingstatus

When this command returns "status": "Staking is active", you know your wallet is staking properly. You may also want to confirm your staking wallet balance is correct with:

blocknet-cli getbalance
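The manual "repeat until it returns nothing" check and the staking confirmation above can also be scripted. A minimal sketch, assuming the procps pgrep tool (installed on stock Ubuntu) and the aliases from the VPS Staking guide. wait_while and staking_is_active are hypothetical helpers, and the staking check only matches the status text quoted in this guide.

```shell
# Hypothetical helper: poll while COMMAND keeps succeeding, every INTERVAL
# seconds. Returns 0 once COMMAND fails (e.g. the process is gone),
# or 1 once TIMEOUT seconds have passed.
wait_while() {
  local timeout=$1 interval=$2 elapsed=0
  shift 2
  [ "$interval" -ge 1 ] || interval=1
  while "$@" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout" ]; then
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  return 0
}

# Hypothetical helper: succeed if the given getstakingstatus output
# contains "status": "Staking is active".
staking_is_active() {
  printf '%s\n' "$1" | grep -q '"status": *"Staking is active"'
}

# Example (interactive shell with the VPS Staking guide aliases):
#   blocknet-cli stop
#   wait_while 300 5 pgrep -x blocknetd && mv ~/.blocknet ~/.blocknet_staking
#   ...
#   staking_is_active "$(blocknet-cli getstakingstatus)" && echo "staking OK"
```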


Enterprise XRouter Environment Service Node Setup - Docker based (Recommended)

Note: This Enterprise XRouter (EXR) Environment Service Node Setup Guide is for setting up a Service Node on a computer running Ubuntu Linux OS. Please adjust any Ubuntu-specific steps as necessary if setting up your Service Node on a different OS.

To set up your Service Node using the Enterprise XRouter Environment Service Node Setup, complete the following guides in order:

- Set up an Ubuntu Linux server

- Collateral Wallet Setup for EXR Service Node

- Deploy Enterprise XRouter Environment

- Maintenance of Enterprise XRouter Environment

Set up an Ubuntu Linux server

Follow these steps to set up a Virtual Private Server (VPS) running Ubuntu 20.04.3 LTS Linux Operating System. Please make sure the VPS you set up meets the hardware requirements for a Service Node.

Note: The instructions below assume bash shell, the default shell for Ubuntu 20.04.3 LTS Linux, is used. Please adjust as necessary if a different shell is used.

- If you're new to the Linux Command Line Interface (CLI), learn the basics. You don't have to learn every detail, but learn to navigate the file system, move and remove files and directories, and edit text files with vi, vim or nano.

- Sign up for an account at an economical, reliable VPS provider. For example, you may wish to explore services available from VPS providers like Contabo, Digital Ocean, Vultr, Amazon AWS and Google Cloud Computing. A Google search for "VPS hosting provider" will yield a multitude of other options. You'll want to rent and deploy a VPS running Ubuntu 20.04.3 LTS Linux through your VPS provider.

- Follow the guides available from your VPS provider to launch your Ubuntu VPS and connect to it via ssh (from Mac or Linux Terminal) or via PuTTY (from Windows). For example, here is a nice Quick Start Guide from Digital Ocean, and here is a guide from Contabo on connecting to your VPS.

- The first time you connect to your VPS, you'll be logged in as the root user. Create a new user with the following command, replacing <username> with a username of your choice:

adduser <username>

You will be prompted for a password. Enter a password for <username>, different from your root password, and store it in a safe place. You will also see prompts for user information, but these can be left blank.

- Once the new user has been created, add it to the sudo group so it can perform commands as root. Only commands/applications run with sudo will run with root privileges; others run with regular privileges. Type the following command with your <username>:

usermod -aG sudo <username>

- Type exit at the command prompt to end your Linux session and disconnect from your VPS.

- Reconnect to your VPS (via ssh or PuTTY), but this time connect as the <username> you just added.

- Using ssh from Mac or Linux Terminal, for example:

ssh <username>@VPS_IP

- Using PuTTY from Windows, configure PuTTY to use VPS_IP as before, but this time log in to your VPS with the <username> and password you just set.

- Update the list of available packages (enter the password for <username> when prompted for the [sudo] password):

sudo apt update

- Upgrade the system by installing/upgrading packages:

sudo apt upgrade

- Make sure the nano and unzip packages are installed:

sudo apt install nano unzip

- Create at least as much swap space as you have RAM. So, if you have 16 GB of RAM, you should create at least 16 GB of swap space. Many recommend creating twice as much swap space as you have RAM, which is a good idea if you can spare the disk space. However, more than 16 GB of swap space may not be required. To create swap space:

- Check if your system already has swap space allocated:

swapon --show

- If the results of swapon --show look similar to this:

NAME      TYPE SIZE USED PRIO
/swapfile file   2G   0B   -2

that means you already have some swap space allocated, and you should follow this guide to resize it to 1x-2x your amount of RAM.

- If the results of swapon --show do not indicate that your system has a swapfile of type file, you'll need to create a new swap file of 1x-2x your amount of RAM. To do so, follow this guide.

- (Highly Recommended) Increase the security of your VPS by setting up SSH Keys, so that only your own computer can log in to your VPS. Those connecting via PuTTY from Windows should first follow this guide to set up SSH Keys with PuTTY. Note: If you follow this recommendation to restrict access to your VPS via SSH Keys, back up your SSH Private Key and save the password you choose to unlock it.
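For the swap-space step above, the guidance can be read as: at least as much swap as RAM, ideally twice the RAM, without going beyond 16 GB unless your RAM itself is larger. The sketch below encodes that one reading (recommended_swap_gb is a hypothetical helper). It only computes a size; the swap file itself should still be created or resized by following the linked guide.

```shell
# Hypothetical helper: one reading of this guide's swap advice.
#   ideal = 2 x RAM, capped at 16 GB, but never less than RAM itself.
recommended_swap_gb() {
  local ram_gb=$1 swap_gb
  swap_gb=$((ram_gb * 2))
  [ "$swap_gb" -le 16 ] || swap_gb=16
  [ "$swap_gb" -ge "$ram_gb" ] || swap_gb=$ram_gb
  echo "$swap_gb"
}

# Example on Ubuntu (free -g rounds down):
#   recommended_swap_gb "$(free -g | awk '/^Mem:/ {print $2}')"
```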

Continue on to Collateral Wallet Setup for EXR Service Node.

Collateral Wallet Setup for EXR Service Node

- Create a new public address to hold collateral for your Service Node. It is recommended to create a new collateral address for each Service Node whose collateral will be stored in this wallet. A unique name for each collateral address will need to be chosen as an alias. We'll refer to this name below as ALIAS.

- To create a collateral address on the GUI/Qt wallet, there are 2 options:

- Select the Address Book tab on the left side of the wallet, then select Create New Address at the top, then fill in the Alias field with your ALIAS and click Add Address.

- Navigate to Tools->Debug Console and type:

getnewaddress ALIAS

For example:

getnewaddress snode01

The getnewaddress command will return a new address, like BmpZVb522wYmryYLDy6EckqGN4g8pT6tNP

- To create a collateral address on the CLI wallet:

blocknet-cli getnewaddress ALIAS

For example:

blocknet-cli getnewaddress snode01

The getnewaddress command will return a new address, like BmpZVb522wYmryYLDy6EckqGN4g8pT6tNP

- Create the inputs needed for the collateral address. Each address where collateral funds for a Service Node are stored must meet the following requirements:

Collateral Address Requirements

- There must be 10 or fewer inputs in the address whose sum is >= 5000 BLOCK. There can be other inputs in the address which don't factor into this calculation, but it must be possible to add 10 or fewer inputs in the address to achieve a sum of >= 5000 BLOCK.

- If you want the address to qualify for 1 Superblock vote (recommended), you should create one small input to the address, ideally around 1 BLOCK in size, which is separate from the inputs used to meet the 5000 BLOCK requirement listed above.

- If you want the address to qualify for 1 Superblock vote (recommended), you should also ensure that the set of inputs used to meet the 5000 BLOCK requirement are all 100 BLOCK or larger in size.
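The three requirements can be checked mechanically from a list of input amounts. The sketch below is one interpretation (a hypothetical helper, not part of the Blocknet wallet): it sorts the inputs largest-first, tests whether the 10 largest reach 5000 BLOCK, and, for voting, tests whether 5000 BLOCK can be reached with at most 10 inputs of 100 BLOCK or more while leaving at least one other input. The wallet's own selection logic may differ.

```shell
# Hypothetical helper: read input amounts (one per line) on stdin and print
# "collateral=yes|no vote=yes|no" according to one reading of the rules above.
check_collateral_inputs() {
  LC_ALL=C sort -rn | awk '
    NF { amt[++n] = $1 + 0 }
    END {
      # Base rule: the 10 largest inputs must sum to at least 5000 BLOCK.
      top = 0
      for (i = 1; i <= n && i <= 10; i++) { top += amt[i] }

      # Voting rule: reach 5000 BLOCK with at most 10 inputs of >= 100 BLOCK
      # (largest first) and leave at least one other (small) input unused.
      sum = 0; used = 0
      for (i = 1; i <= n && used < 10 && sum < 5000; i++) {
        if (amt[i] >= 100) { sum += amt[i]; used++ }
      }

      collateral = (top >= 5000) ? "yes" : "no"
      vote = (sum >= 5000 && n > used) ? "yes" : "no"
      printf "collateral=%s vote=%s\n", collateral, vote
    }'
}

# Example, assuming jq is installed and listunspent returns Bitcoin Core-style
# objects with "address" and "amount" fields:
#   blocknet-cli listunspent |
#     jq -r '.[] | select(.address == "BmpZVb522wYmryYLDy6EckqGN4g8pT6tNP") | .amount' |
#     check_collateral_inputs
```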

To fund a collateral address such that it meets these requirements, there are two options: Manual Funding or servicenodecreateinputs. Use one of these two options (a or b):

- Manual Funding (recommended): Each time you send BLOCK to the collateral address, it creates an input to the address whose size is equal to the amount sent. So, to meet all the above requirements, you could send 1 BLOCK to the collateral address, then send BLOCK in amounts of >= 500 and <= 5000 BLOCK to the same collateral address until the sum of the >= 500 BLOCK inputs is >= 5000.

- For example, you could send 1 BLOCK to the collateral address, then send 1250 BLOCK to the collateral address 4 times. After doing so, the collateral address would have 5 inputs: 1 input of 1 BLOCK and 4 inputs of 1250 BLOCK each. This would meet the above requirements.

- As another example, you could send 1 BLOCK to the collateral address, then send 5000 BLOCK to the same collateral address. That would leave the collateral address with 2 inputs: 1 input of 1 BLOCK and 1 input of 5000 BLOCK. This would also meet the above requirements.

Note: If you are sending to the collateral address from the same wallet that contains the collateral address (and possibly other collateral addresses), it's a good idea to either lock the inputs of funds in collateral addresses, or send the funds using the Coin Controlled Sending feature, to ensure the funds you're sending are not withdrawn from a collateral address you're trying to fund. It's easiest to perform coin controlled sending from the redesigned GUI/Qt wallet. However, if sending from a CLI wallet, you can use methods like createrawtransaction and sendrawtransaction to accomplish coin controlled sending. Get help on using these methods in the CLI wallet with:

blocknet-cli help createrawtransaction

and

blocknet-cli help sendrawtransaction

Tip: To see all inputs of a collateral address:

-

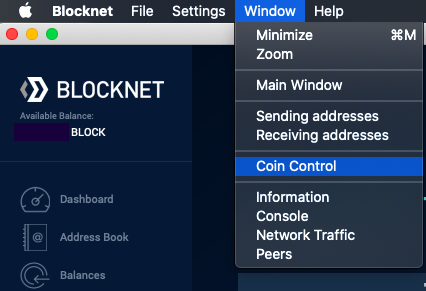

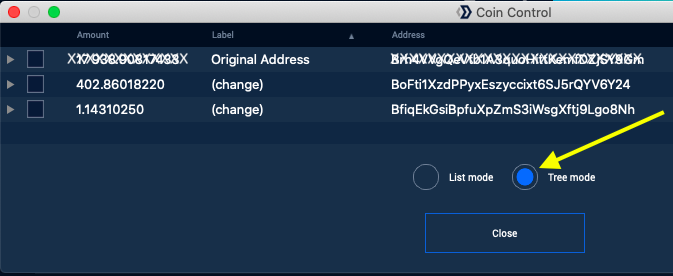

In a redesigned GUI/Qt wallet: Select Window->Coin Control to display the addresses and Inputs where funds are stored in your wallet:

This will display a screen like this (in Tree mode): On this screen you can click the small grey triangle

to the left of an address to see the

Inputs of the address.

On this screen you can click the small grey triangle

to the left of an address to see the

Inputs of the address. -

In a CLI wallet, or in Tools->Debug Console of the GUI/Qt wallet: Use the

listunspentmethod to list all wallet Inputs and their associated addresses.

From the CLI wallet:From Tools->Debug Console of the GUI/Qt wallet:blocknet-cli listunspentlistunspent

-

-

- servicenodecreateinputs: servicenodecreateinputs is a method/tool of the Blocknet core wallet that can facilitate creating a set of inputs in a collateral address that meets the above requirements. Not only does it automatically create a set of inputs that meets the requirements, it also automatically creates a 1 BLOCK input in the collateral address to make the address eligible for voting.

NOTE: If your wallet contains other collateral addresses which have already been funded, you'll want to lock the inputs of funds in all collateral addresses to prevent servicenodecreateinputs from withdrawing funds from other collateral addresses when it's called.

NOTE: It is possible to use servicenodecreateinputs to set up collateral for multiple Service Nodes in a single collateral address. However, because this practice makes it difficult for tracking tools to associate collateral addresses with Service Nodes, the recommendation is to use a separate collateral address for each Service Node's collateral. Effectively, this means the recommendation is to always pass a NODE_COUNT parameter of 1, or just leave NODE_COUNT blank so it will default to 1 when calling servicenodecreateinputs. (See examples below.)

To use the servicenodecreateinputs method/tool, your collateral wallet should contain at least 1 BLOCK to cover the transaction fee of calling the servicenodecreateinputs method, and at least 5001 BLOCK for each Service Node collateral you want to set up. Once those conditions are met, you can use the servicenodecreateinputs method like this:

From Tools->Debug Console of the GUI/Qt wallet:

servicenodecreateinputs NODE_ADDRESS NODE_COUNT INPUT_SIZE

From the CLI wallet:

blocknet-cli servicenodecreateinputs NODE_ADDRESS NODE_COUNT INPUT_SIZE

In these examples, replace NODE_ADDRESS, NODE_COUNT and INPUT_SIZE with the desired values, as per the following definitions:

- NODE_ADDRESS = The collateral address created in step 1 above.

- NODE_COUNT = The number of Service Nodes for which to configure the collateral address. It is recommended to make this 1, or just leave it blank so it will default to 1. However, as mentioned, it is possible to configure a single collateral address to provide collateral for multiple Service Nodes.

- INPUT_SIZE = The amount of BLOCK for each input of the collateral address. It must be >= 500 and <= 5000, and if left blank, it defaults to 1250. For example, 1000 will create 5 inputs of 1000 BLOCK each (per Service Node).

Example which creates 4 inputs of 1250 BLOCK each in the collateral address, for a single Service Node:

servicenodecreateinputs BmpZVb522wYmryYLDy6EckqGN4g8pT6tNP

Example which creates 2 inputs of 2500 BLOCK each in the collateral address, for a single Service Node:

servicenodecreateinputs BmpZVb522wYmryYLDy6EckqGN4g8pT6tNP 1 2500

Example which creates 2 inputs of 2500 BLOCK each in the collateral address for each of 4 Service Nodes:

servicenodecreateinputs BmpZVb522wYmryYLDy6EckqGN4g8pT6tNP 4 2500

This last example will result in 8 inputs of 2500 BLOCK each being created in the collateral address (plus a 1 BLOCK input for voting).

- Prepare to create (or add a new entry to) a servicenode.conf file in your data directory. If the servicenode.conf file does not exist in your data directory, proceed to the next step. Otherwise, review the contents of servicenode.conf in an editor. If all the service node references in the file are out-of-date, exit the editor and delete the file. If some service node references are current/valid, but others are out-of-date, delete all lines containing out-of-date service node references, save the file and exit the editor.

- Create (or add a new entry to) a servicenode.conf configuration file. Use the servicenodesetup command as follows:

From Tools->Debug Console of the GUI/Qt wallet:

servicenodesetup NODE_ADDRESS ALIAS

From the CLI wallet:

blocknet-cli servicenodesetup NODE_ADDRESS ALIAS

Where NODE_ADDRESS is the collateral address you created in step 1 above, and ALIAS is any name you want to give to your Service Node (or group of Service Nodes). The ALIAS you choose here doesn't have to be the same as the ALIAS you chose for your collateral address in step 1, but it is usually most convenient to use the same ALIAS. The servicenodesetup command will create an entry for the Service Node(s) in the servicenode.conf file in your collateral wallet data directory. Example:

servicenodesetup BmpZVb522wYmryYLDy6EckqGN4g8pT6tNP snode01
{
  "alias": "snode01",
  "tier": "SPV",
  "snodekey": "02a5d0279e484a3df81acd611e1052d2e0797e796564ecbc25c7fe19f36e9985e5",
  "snodeprivkey": "PswGMd6faZf1ceLojzGeKn7LQuXVwYgRQG8obUKrThZ8ap4pkRR7",
  "address": "BmpZVb522wYmryYLDy6EckqGN4g8pT6tNP"
}

- Copy the snodeprivkey and the address returned in the previous step and paste them into a temporary text file. You'll need them later on. (In the above example, snodeprivkey is PswGMd6faZf1ceLojzGeKn7LQuXVwYgRQG8obUKrThZ8ap4pkRR7, and address is BmpZVb522wYmryYLDy6EckqGN4g8pT6tNP.) Note: both the snodeprivkey and the address of your Service Node are also stored at this point in the servicenode.conf file in your collateral wallet data directory, so you can always find them there if you don't want to paste them into a temporary text file.

- Restart the Collateral Wallet.

- For GUI/Qt wallet, simply close the Blocknet wallet application, then open it again.

- For CLI wallet (assuming aliases for blocknet-cli and blocknet-daemon have been set up according to the VPS Staking guide):

blocknet-cli stop
blocknet-daemon

Note: you will probably need to wait at least 30 seconds after issuing blocknet-cli stop or closing the GUI wallet app before you'll be allowed to relaunch blocknet-daemon or relaunch the GUI wallet. Just keep trying every 30 seconds or so until you no longer get errors.

- Assuming you want to stake your collateral, unlock your staking wallet for staking only:

- For GUI/Qt wallet, unlock your wallet for staking only according to the staking guide for GUI wallet.

- For CLI wallet (assuming an alias for blocknet-unlock has been set up according to the VPS Staking guide), enter the following command and type your wallet password when prompted:

blocknet-unlock

- Confirm your wallet is staking with the getstakingstatus command.

From Tools->Debug Console of the GUI/Qt wallet:

getstakingstatus

From the CLI wallet:

blocknet-cli getstakingstatus

When this command returns "status": "Staking is active", you know your wallet is staking properly. You may also want to confirm your staking wallet balance is correct with getbalance:

From Tools->Debug Console of the GUI/Qt wallet:

getbalance

From the CLI wallet:

blocknet-cli getbalance

- Continue on to Deploy Enterprise XRouter Environment.
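Two small helpers for the collateral steps above, both hypothetical sketches. preview_createinputs shows how many inputs servicenodecreateinputs should create for a given NODE_COUNT and INPUT_SIZE, assuming it creates ceil(5000 / INPUT_SIZE) inputs per Service Node, as in the examples above. snode_field pulls snodeprivkey or address out of servicenodesetup output saved to a file, using a simple sed match rather than a full JSON parser.

```shell
# Hypothetical helper: preview "servicenodecreateinputs ADDR NODE_COUNT INPUT_SIZE".
# Assumes ceil(5000 / INPUT_SIZE) inputs per node (1250 -> 4, 1000 -> 5, 2500 -> 2).
# min_balance follows this guide's rule: 5001 BLOCK per node + 1 BLOCK fee.
preview_createinputs() {
  local node_count=${1:-1} input_size=${2:-1250} per_node
  if [ "$input_size" -lt 500 ] || [ "$input_size" -gt 5000 ]; then
    echo "INPUT_SIZE must be >= 500 and <= 5000" >&2
    return 1
  fi
  per_node=$(( (5000 + input_size - 1) / input_size ))   # integer ceiling
  echo "inputs=$((per_node * node_count)) size=$input_size min_balance=$((node_count * 5001 + 1))"
}

# Hypothetical helper: print the value of "FIELD": "..." from a saved
# servicenodesetup result (FILE). Plain sed match, not a JSON parser.
snode_field() {
  sed -n "s/.*\"$1\": *\"\([^\"]*\)\".*/\1/p" "$2"
}

# Example:
#   preview_createinputs 1 2500
#   blocknet-cli servicenodesetup BmpZVb522wYmryYLDy6EckqGN4g8pT6tNP snode01 > ~/snode01.json
#   snode_field snodeprivkey ~/snode01.json
```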

Deploy Enterprise XRouter Environment

First, log in to the Ubuntu Linux server you set up above (as the user you created, not as root), then continue with this guide.

Run Global Install Script for Enterprise XRouter Environment

If you have never run the Enterprise XRouter Environment (EXR ENV) Global Install script on this server, or if you have not run it since 1 Oct, 2022, copy/paste these commands to run the Global Install script:

curl -fsSL https://raw.githubusercontent.com/blocknetdx/exrproxy-env-scripts/main/env_installer.sh -o env_installer.sh

chmod +x env_installer.sh

./env_installer.sh --install

If the Global Install Script detects that docker/docker-compose is already installed, it simply won't install new version(s). If it detects that the ~/exrproxy-env directory already exists, it will update it. If it detects that ~/exrproxy-env does not exist yet, it will clone it from the GitHub repository and thereby create it.

IMPORTANT: This Global Install Script will log you out after it finishes. Simply log in again, then follow the steps below.

- Prepare to enter all the details you'll need when you run the builder.py script:

- Most likely, builder.py will automatically find your server's public IP address, but it's good to know your server's IP so you can verify (in a future step) that builder.py has found it correctly. Fetch your Service Node computer's Public IP address, then copy/paste it to a temporary text file for easy access. Some options for fetching your Service Node computer's Public IP include:

curl ipconfig.io

curl ifconfig.co

dig +short myip.opendns.com @resolver1.opendns.com

- Make sure you have easy copy/paste access to your Servicenode Private Key and Servicenode Address, which you got earlier from the Collateral Wallet Setup Procedure.

- Think of a name for your Service Node. It doesn't have to be the same name you chose to label the address of your Service Node during the Collateral Wallet Setup, but it's often convenient to use the same name. Note, there should be no spaces in the names you choose.

- Think of a name and a password for the RPC user your Service Node will use when communicating with the services/coins it supports.
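Before starting builder.py, you may want to sanity-check the values you collected. This Bash sketch uses hypothetical helpers (is_ipv4, has_no_spaces, fetch_public_ip). fetch_public_ip tries the three lookup commands listed above in order and keeps the first reply that looks like an IPv4 address. The sketch assumes your server's public address is IPv4; curl's -4 flag forces an IPv4 connection for the same reason.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight checks for the values you will enter in builder.py.

# is_ipv4 ADDR: succeeds if ADDR is a dotted-quad IPv4 address (octets 0-255).
is_ipv4() {
  local octet
  [[ $1 =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]] || return 1
  for octet in "${BASH_REMATCH[@]:1}"; do
    ((10#$octet <= 255)) || return 1   # 10# avoids octal parsing of "08"
  done
}

# has_no_spaces NAME: succeeds if NAME is non-empty and has no whitespace.
has_no_spaces() {
  [[ -n $1 && $1 != *[[:space:]]* ]]
}

# fetch_public_ip: tries each lookup in turn, printing the first valid reply.
fetch_public_ip() {
  local ip
  ip=$(curl -4 -fsS --max-time 5 ipconfig.io) && is_ipv4 "$ip" && { echo "$ip"; return 0; }
  ip=$(curl -4 -fsS --max-time 5 ifconfig.co) && is_ipv4 "$ip" && { echo "$ip"; return 0; }
  ip=$(dig +short myip.opendns.com @resolver1.opendns.com) && is_ipv4 "$ip" && { echo "$ip"; return 0; }
  return 1
}
```

For example, run fetch_public_ip to print the address to copy, and run has_no_spaces "MySNode01" && echo "name OK" to check a candidate Service Node name or RPC user name.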


- Change directory to your local exrproxy-env repository (exrproxy-env is located in ~ by default):

cd ~/exrproxy-env

- Update your local repository and launch the SNode Builder:

Assuming you have previously run the Global Install Script on this server, you can update your local environment repository and launch the SNode builder tool with this command:

./exr_env.sh --update --builder ""

The --update parameter updates the local repository. The --builder "" parameter launches the builder.py tool when the update is complete. If you prefer, you can leave off the --builder "" parameter, then call ./builder.py standalone after ./exr_env.sh --update completes, like this:

./exr_env.sh --update

./builder.py

IMPORTANT: When passing parameters to ./builder.py through ./exr_env.sh, the parameters of ./builder.py must follow the --builder parameter and they must all be enclosed in double quotes ("). For example:

./exr_env.sh --builder "--deploy"

./exr_env.sh --builder "--source autobuild/sources.yaml"

./exr_env.sh --builder ""

Parameters passed to ./builder.py when it's called as a standalone app do not require double quotes around them. For example:

./builder.py --source autobuild/sources.yaml --deploy

- First, builder.py will display some information about your system's available hardware resources. This information will be useful in the SNode configuration process. It will look something like this:

builder.py will then check whether the necessary environment, and the necessary versions of docker and docker-compose, have been installed on your server. If not, it will instruct you to install them and exit.

- Next, it will prompt you for your sudo password. Enter your user password, not your root password.
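The double-quote rule for --builder exists because the shell splits unquoted text into separate arguments. This tiny Bash illustration uses count_args, a throwaway helper that is not part of EXR ENV. It prints how many arguments a script such as ./exr_env.sh would receive in each case, assuming exr_env.sh treats the single word after --builder as the complete option string for builder.py.

```shell
#!/usr/bin/env bash
# Illustration only: print how many separate arguments a command receives.
count_args() {
  echo "$#"
}

# Quoted: --builder plus ONE argument holding all builder.py options.
count_args --builder "--source autobuild/sources.yaml"   # prints 2
# Unquoted: the builder.py options become separate words.
count_args --builder --source autobuild/sources.yaml     # prints 3
# Empty quotes still pass one (empty) argument after --builder.
count_args --builder ""                                  # prints 2
```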

- Next, it will ask you some questions about how you want to configure your Service Node. If at any time you want to change any of the answers you have given while configuring your SNode, simply press Control-C to stop the configuration process, then run ./builder.py (or ./exr_env.sh --builder "") again.

- The first questions it will ask will be about your Public IP address, Service Node Name, Service Node Private Key, Service Node Address, RPC User and RPC Password. You should have the answers to all these questions already prepared from step 1 above.

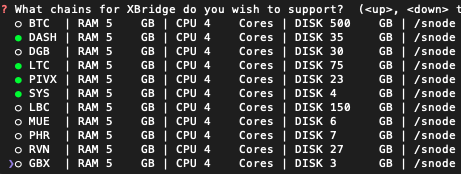

- Next, you'll be presented with a series of queries about which services you want to support on your Service Node. The approximate amount of RAM, # of CPUs, and amount of DISK (SSD) storage space required to support each service will be displayed next to the service name. Note that the cumulative DISK storage space requirements of all the services you select to store data in a particular directory must be less than the total amount of DISK storage space available in that directory. For example, the sum of the DISK requirements for all the services you select to store data in the /snode directory must be less than the total available space in the /snode directory. (Note, the available space on each of your server's mounted directories is displayed when you first run builder.py, as in step 4 above.) The RAM and CPU requirements for different services are not necessarily cumulative in the same way. For example, ETH and AVAX both require 16 GB of RAM, but that doesn't necessarily mean you need 32 GB RAM to support both of them on your SNode. However, if you want the ability to sync both of them concurrently, or to guarantee optimal performance, then 32+ GB RAM would be recommended.

- The first services you'll be given the option to support will be the XBridge SPV blockchain services. In the following example, SPV blockchains DASH, LTC, PIVX and SYS have been selected to be supported as XBridge services:

Next to each XBridge service in the list, the approximate RAM, # of CPU cores and DISK requirements for the blockchain are displayed. The default data mount directory (/snode in this example) is also displayed for each blockchain. (In a later step, you'll be given the option to change the data mount directory for specific services.) If you want to change the global default data mount directory for all services you'll deploy, you can change it from /snode to some other directory by following this procedure:

Change Global Default Data Mount Directory.

- Press Control-C to stop builder.py if it's currently running.

- Make a copy of autobuild/sources.yaml. For example:

cp autobuild/sources.yaml autobuild/my_custom_dir_sources.yaml

- Use a simple editor like vi or nano to edit the copy you just made. For example:

vi autobuild/my_custom_dir_sources.yaml

- In the editor, search for all occurrences of /snode and replace them with whatever new global default you want for your data mount dir.

- Save the file and exit the editor.

- Run ./builder.py, passing it a parameter to specify the file you just edited as the source of available coins/services. For example:

./builder.py --source autobuild/my_custom_dir_sources.yaml
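If you prefer not to edit by hand, the search-and-replace step can be done with sed. This is a sketch under an assumption: it presumes /snode appears in autobuild/sources.yaml only where it denotes the data mount directory, so review the diff before using the result. rewrite_mount_dir is a hypothetical helper, and /mnt/bigdisk/snode in the example is only an illustrative target.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace every occurrence of one data mount directory
# with another. Reads YAML on stdin and writes the edited text to stdout.
# Caveats: OLD is treated as a sed regex (a "." matches any character), and
# NEW must not contain '#', '&' or '\', which sed would interpret specially.
rewrite_mount_dir() {
  local old=$1 new=$2
  # '#' is used as the sed delimiter so the slashes in paths need no escaping.
  sed "s#${old}#${new}#g"
}
```

For example:

rewrite_mount_dir /snode /mnt/bigdisk/snode < autobuild/sources.yaml > autobuild/my_custom_dir_sources.yaml

diff autobuild/sources.yaml autobuild/my_custom_dir_sources.yaml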


-

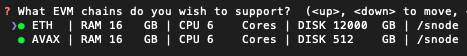

Next, you'll be given the option to choose which EVM blockchains you want to support. In the following example, both ETH and AVAX EVM blockchains are selected to be supported:

The HW requirements and default data mount directory (/snode in this example) are displayed for each blockchain. Note: The HW requirements listed next to each EVM chain assume the EVM chain will be hosted internally, on the EXR ENV server itself. Supporting an externally hosted EVM does not add any HW requirements to the EXR ENV server. (In a later step, you'll be given the option to specify the Host IPs of the EVM blockchains your SNode will support externally.)

- If you chose to support EVM blockchains, you'll be given the option to support XQuery and/or Hydra services for each of the EVMs you'll support.

-

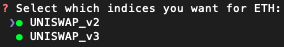

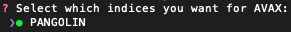

If you chose to support XQuery, you'll be given the option to select which indices you want XQuery to support for each EVM you'll support. Supporting all available indices is recommended. For example:

-

Next, if you chose to support XQuery and/or Hydra services, you'll be prompted to enter the US dollar amounts you want to charge for the services you chose to support. If you chose to support XQuery services, you'll be prompted for how many USD you want to charge for access to XQuery. If you chose to support Hydra services, you'll be prompted for how many USD you want to charge for access to Hydra services at tier1 and tier2 levels. (You won't be prompted for tier2 level pricing if you didn't elect to support ETH because tier2 level only applies when ETH is supported.) The USD amounts you enter are what your SNode will charge for 6,000,000 API calls. Pricing in terms of 6,000,000 API calls makes it convenient to compare your pricing with that of other similar services. Default values are recommended. See Hydra/XQuery Projects API for definitions of tier1 and tier2 service levels.

Note: Entering 0 for any of the USD amounts you're prompted for will allow clients of your SNode to create projects which have 10,000 free API calls to the service(s) for which you are charging 0 USD.

- Next, you'll be prompted to enter the discount percentages you want to offer clients for payments in aBLOCK, aaBLOCK or sysBLOCK. These payment discounts are relative to the US dollar amounts entered in the previous step. The discounts are meant to encourage payment in various forms of BLOCK, which increases demand for BLOCK. Due to the high ETH gas fees currently associated with paying in aBLOCK, the default discount for aBLOCK is currently set at 20%. Default values are recommended for all discounts.
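Because prices are quoted per 6,000,000 API calls, it can help to convert them before comparing with other providers. The awk-based helpers below are hypothetical. They assume a discount is a simple percentage taken off the USD price, which is how the text above describes it ("relative to the US dollar amounts"). The 30 USD figure in the example is illustrative only, not a default or recommended price.

```shell
#!/usr/bin/env bash
# Hypothetical pricing calculator. Prices are USD per 6,000,000 API calls.

# price_per_million USD_PER_6M: USD per 1,000,000 calls (4 decimals).
price_per_million() {
  awk -v p="$1" 'BEGIN { printf "%.4f\n", p / 6 }'
}

# discounted_price USD_PER_6M DISCOUNT_PERCENT: discounted USD per 6M calls,
# assuming the discount is a plain percentage off the USD price.
discounted_price() {
  awk -v p="$1" -v d="$2" 'BEGIN { printf "%.2f\n", p * (1 - d / 100) }'
}
```

For example, discounted_price 30 20 prints 24.00 (a 20% aBLOCK discount on 30 USD), and price_per_million 30 prints 5.0000.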

- Next, you'll be asked if you want to support UTXO_PLUGIN service. Supporting this service requires about 32 GB RAM, 8 CPU cores and 200 GB of disk space. It doesn't yet offer any financial reward, though a system of rewards for supporting it is planned. Supporting this service does help to make XLite more reliable by increasing the number of data sources from which XLite can achieve an XRouter consensus response when fetching data for the chains it supports.

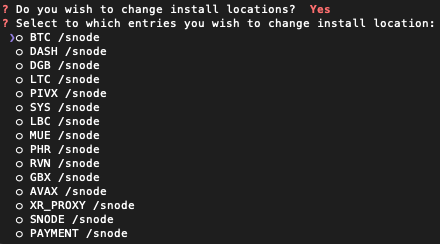

- Next, you'll be asked, "Do you wish to change install locations?" Type "y" here if you want to change the data mount directory of any service from /snode to some other directory. This option can be useful if, for example, you have a separate, very large, fast disk dedicated to supporting ETH, a service which requires large, fast NVMe SSD storage. Selecting "y" here will display a selection menu which shows the current data mount directory for each service and allows you to select the services for which you want to change the data mount directory. The selection menu will look something like the following:

- Next, a storage space calculations table will be displayed which can help you confirm that your server's storage space resources are capable of supporting all the services you've elected to support. The table will look something like this:

If, on analyzing this table, you determine that your server's storage space resources are not capable of supporting all the services you've elected to support, press Control-C to stop the configuration process, then run ./builder.py (or ./exr_env.sh --builder "") again.

There are 6 columns in the storage space calculations table:

- Directory: This column displays the directory in which the data for the service will be stored, called the data mount directory.

- Available: This column displays the available disk space in the data mount directory of the service.

- Required: This column displays the approximate space required to host the blockchain of the service.

- Existing dir: If a previously synced blockchain of the service was found in the data mount directory of the service, this column displays the subdirectory in which the synced blockchain was found (e.g. BTC, LTC) and the size of that synced blockchain (e.g. 456.64 GB, 76.95 GB). If none was found, it displays no subdirectory and 0 GB for size.

- Checks: This column displays a green PASS if the data mount directory of the service has enough space to host the service. Otherwise, it displays a red WARNING. Note: A WARNING message in the Checks column should only be ignored if you are certain the value displayed in the Required column is too large/not accurate, and you have carefully calculated that all your selected services will actually fit on the selected data mount directories of your system.

- Requirement Calculations: This column displays the calculations performed to determine whether a green PASS or a red WARNING should be displayed in the Checks column. If the calculation "Available - (Required - Existing dir)" yields a positive number, PASS is displayed; otherwise, WARNING is displayed.

- At the bottom of the storage space calculations table is a row titled TOTAL, which gives a cumulative summary of all the rows above.
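To double-check the table by hand, the PASS/WARNING rule above can be reproduced with awk. This is a hypothetical sketch of the described formula, not builder.py's actual code. dir_totals also sums what each data mount directory still needs, reflecting the earlier rule that all services sharing a directory must fit in it together. Sizes are plain numbers in GB.

```shell
#!/usr/bin/env bash
# Hypothetical re-implementation of the Checks column rule, for manual review.

# storage_check AVAILABLE REQUIRED EXISTING: prints PASS or WARNING.
storage_check() {
  awk -v a="$1" -v r="$2" -v e="$3" 'BEGIN {
    margin = a - (r - e)                      # Available - (Required - Existing dir)
    result = (margin > 0) ? "PASS" : "WARNING"
    print result
  }'
}

# dir_totals: reads "DIRECTORY REQUIRED_GB EXISTING_GB" lines on stdin and
# prints, per directory, the extra space still needed by all its services.
dir_totals() {
  awk '{ need[$1] += $2 - $3 }
       END { for (d in need) printf "%s %.2f\n", d, need[d] }' | sort
}
```

Compare each dir_totals figure with the Available value for that directory; the directory passes only if Available is larger.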

- Finally, you'll be given the option to use a unique auto-generated file name, or a user-specified file name, for storing the SNode configuration information you've entered. If, for example, you enter the file name latest, then a file containing your SNode configuration will be generated in the inputs_yaml directory, and the file will be named latest.yaml (i.e. your SNode config will be stored as inputs_yaml/latest.yaml).

- (Informational) In addition to being stored in the .yaml format mentioned in the previous step, your SNode configuration will also be stored in a processed form in the file docker-compose.yml. docker-compose.yml will then be used by the ./deploy.sh script when you're ready to deploy your Service Node.

Note for advanced users: If you ever want to manually modify the .yaml file mentioned in the previous step, then use your modified .yaml file to generate a new docker-compose.yml file, you can do so using the --yaml parameter like this:

./builder.py --yaml inputs_yaml/latest.yaml

- Before you deploy your Service Node, you may want to review the SNode config file generated by builder.py (e.g. inputs_yaml/latest.yaml) to confirm that everything is configured as intended. For example, you may want to review the data mount directory (data_mount_dir) for the services which require large amounts of disk space to confirm the specified data_mount_dir has enough disk space available to support the service. If the configuration looks good, you can launch your Service Node by issuing the following command:

./deploy.sh

Tip: If you pass the --deploy parameter to ./builder.py, it will automatically call ./deploy.sh after it finishes generating the SNode configuration files. Examples:

./builder.py --deploy

./exr_env.sh --builder "--deploy"

Most likely you won't need to follow the remaining steps below if you have followed them previously and you're simply reconfiguring your SNode on this run. However, if your SNode has been offline for a while, you may need to reregister it as per steps 26-30 below. You can check if your SNode needs to be reregistered by following steps 29 & 30 below.

Didn't see an option to support the service or coin you want to support?

Options to support more services/coins are continuously being added. The goal is that Service Nodes will have an option to support every coin supported by BlockDX, which means every coin listed in Blocknet manifest-latest.json. Service Nodes will also soon have the option to support a wide variety of EVM blockchains, like Ethereum/ETH, Avalanche/AVAX, Binance Smart Chain/BSC, Fantom/FTM, Solana/SOL, Polkadot/DOT, Cardano/ADA, etc.

Tip: How to Reset Configuration Defaults.

builder.py remembers previous SNode configuration choices. Usually this is quite handy and desirable, but sometimes it's useful to make it forget previous configuration choices. There are 5 files builder.py uses to remember previous configuration choices made by the user: .env, .known_hosts, .known_volumes, .cache and .cache_ip. If you want to reset any of the default values builder.py presents to you during the configuration process, deleting (or renaming) one or more of these files before running ./builder.py will likely do the trick. You can also reset all of these files at once like this:

./builder.py --prunecache

- .env - remembers your Public IP address, Service Node Name, Service Node Private Key, Service Node Address, RPC User and RPC Password.

- .known_hosts - remembers the IP addresses of any externally hosted EVM chains.

- .known_volumes - remembers any special data mount directories you chose for specific services.

- .cache - remembers choices you made about which services to support, and how to set up payments.

- .cache_ip - remembers the IP addresses assigned to docker containers on the last deployment (so it can try to assign the same IP addresses again if they are available).
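Renaming rather than deleting lets you restore an old set of answers later. The backup_builder_cache helper below is a hypothetical sketch that moves the five files listed above aside with a timestamp suffix. Run ./builder.py afterwards so fresh copies are written; .env in particular may also be read by docker-compose at deploy time, so don't deploy while it is missing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: move builder.py's memory files aside instead of
# deleting them. Pass the exrproxy-env directory, or run it from there.
backup_builder_cache() {
  local dir=${1:-.} stamp f moved=0
  stamp=$(date +%Y%m%d-%H%M%S)
  for f in .env .known_hosts .known_volumes .cache .cache_ip; do
    if [[ -e $dir/$f ]]; then
      mv -- "$dir/$f" "$dir/$f.bak-$stamp"
      echo "moved $f -> $f.bak-$stamp"
      moved=$((moved + 1))
    fi
  done
  echo "$moved file(s) moved"
}
```

For example, cd ~/exrproxy-env, then run backup_builder_cache followed by ./builder.py. To restore an old answer set, rename the .bak- copy back to its original name.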

Warning: Only expose Hasura GUI XQuery port to restricted hosts, if ever.¶


It is a security risk to leave the Hasura GUI Console port exposed to all hosts. By default, this port is not exposed to outside hosts. However, there may be occasions when an advanced SNode operator wants to expose the Hasura GUI Console port to a select set of outside hosts for a time. For example, it could be useful to allow a client to design a query to the XQuery service on your SNode using the convenient Hasura graphical query interface. See XQuery Hasura GUI Console for instructions on how to view the XQuery Hasura GUI Console in a browser.

If you want to expose the Hasura GUI Console port on your SNode, edit the following section in docker-compose.yml just before you run ./deploy.sh:

    xquery-graphql-engine:
      image: hasura/graphql-engine:v2.0.10
      hostname: graphql-engine
      #ports:
      #  - "8080:8080"

Uncomment the last two lines of this section, like this:

    xquery-graphql-engine:
      image: hasura/graphql-engine:v2.0.10
      hostname: graphql-engine
      ports:
        - "8080:8080"

To restrict access to port 8080 to a limited set of hosts, you can use uncomplicated firewall (ufw).
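If you take the ufw route, you need one rule per allowed client. The helper below only prints ufw commands for review. ufw_allow_cmds is hypothetical, and the IPs in the example are documentation addresses. Be aware that ports published by Docker are normally handled by Docker's own iptables rules, which can bypass ufw. After applying any rules, confirm from a host that should be blocked that port 8080 is actually unreachable.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) ufw rules allowing each given client
# IP to reach one TCP port, e.g. the Hasura console port 8080.
ufw_allow_cmds() {
  local port=$1 ip
  shift
  for ip in "$@"; do
    echo "sudo ufw allow from $ip to any port $port proto tcp"
  done
}
```

For example, ufw_allow_cmds 8080 203.0.113.10 198.51.100.7 prints two commands you can review and then paste.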

-

If you are trying to add Service Node support for a new coin which is not yet listed in the Blocknet manifest-latest.json configuration file, please refer to the Listing Process for general information on listing a new coin. Then, to add support for the new coin on your Service Node, follow this procedure:

Add Support for a New Coin or a New Config of a Coin.

- Make a copy of autobuild/sources.yaml. For example:

cp autobuild/sources.yaml autobuild/custom.yaml

- Use a simple editor like vi or nano to edit the copy you just made. For example:

vi autobuild/custom.yaml

- In the editor, duplicate the entry for some common coin (e.g. LTC), then modify the duplicate entry so that it references the name and docker image of the new coin you want to support.

- Save the file and exit the editor.

- Run ./builder.py, passing it two parameters: one to specify the file you just edited as the source of available coins/services, and one to instruct it to reference a configuration repository which contains configuration data for the new coin. For example:

./builder.py --source autobuild/custom.yaml --branchpath https://raw.githubusercontent.com/ConanMishler/blockchain-configuration-files/bitcoin--v0.22.0.conf

NOTE: The URL passed via the --branchpath parameter must be in raw form, as in the example above.

- Launch your SNode by issuing the following command:

./deploy.sh
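If you only have the ordinary GitHub page URL of a configuration branch, it can be converted to the raw form that --branchpath expects. This sketch assumes the repository is on GitHub and that you start from a branch URL of the form https://github.com/OWNER/REPO/tree/BRANCH. to_raw_branchpath is a hypothetical helper; URLs already in raw form pass through unchanged.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert
#   https://github.com/OWNER/REPO/tree/BRANCH
# into
#   https://raw.githubusercontent.com/OWNER/REPO/BRANCH
# Any other URL (including an already-raw one) is printed unchanged.
to_raw_branchpath() {
  sed -E 's#^https://github\.com/([^/]+)/([^/]+)/tree/#https://raw.githubusercontent.com/\1/\2/#' <<<"$1"
}
```

For example, to_raw_branchpath https://github.com/ConanMishler/blockchain-configuration-files/tree/bitcoin--v0.22.0.conf prints the raw URL used in the example above.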


-

Advanced users may want to deploy a Testnet SNode, a Trading Node, or a Testnet Trading Node instead of a regular SNode. These 3 special kinds of nodes are defined here.

Deploy a Testnet SNode, a Trading Node, or a Testnet Trading Node.

- Make a copy of autobuild/sources.yaml. For example:

cp autobuild/sources.yaml autobuild/custom.yaml

- Use a simple editor like vi or nano to edit the copy you just made. For example:

vi autobuild/custom.yaml

- In the editor, search for the following text:

    - name: SNODE

then change SNODE to one of the following, depending on which type of node you want to deploy:

- testSNODE

- TNODE

- testTNODE

- Save the file and exit the editor.

- Run ./builder.py, passing it a parameter to specify the file you just edited as the source of available coins/services. For example:

./builder.py --source autobuild/custom.yaml

- Launch your node by issuing the following command:

./deploy.sh
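The edit above can also be made with sed. This sketch assumes the entry appears as "- name: SNODE" on its own line (possibly indented) and accepts only the three node types listed. set_node_type is a hypothetical helper that reads the YAML on stdin and writes the edited text to stdout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: change the "- name: SNODE" entry to another node type.
set_node_type() {
  case $1 in
    testSNODE|TNODE|testTNODE) ;;
    *) echo "unknown node type: $1" >&2; return 1 ;;
  esac
  # Keep the original indentation and "- name: " prefix; replace SNODE only
  # when it is the whole value on that line.
  sed -E "s/^([[:space:]]*- name: )SNODE[[:space:]]*\$/\1$1/"
}
```

For example:

set_node_type TNODE < autobuild/sources.yaml > autobuild/custom.yaml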


-

(Recommended for servers with less than 32 GB RAM or less than 10 CPU cores.) If your server's HW resources are somewhat limited, it is recommended to deploy certain SNode services in stages, because some services are known to require large amounts of RAM and/or I/O bandwidth while they are syncing their respective blockchains. ETH, AVAX, LBC and DGB all fall into this category. For this reason, on a server with limited HW resources, it is recommended not to run more than one of those 4 while any one of them is syncing. For example, it's best not to run LBC or DGB while AVAX is syncing, or to run AVAX or DGB while LBC is syncing. To deploy SNode services in stages, simply limit which services you select to support the first time you run ./builder.py, then run ./builder.py again after the resource-intensive blockchain(s) have synced, this time selecting additional services to support.

- (Informational) Once your SNode is configured and deployed, the scripts will launch docker containers for all the services you configured your Service Node to support. You can see all the running docker containers by issuing the command:

docker ps

This command will display the CONTAINER ID, the IMAGE used to build it, the COMMAND running in the container, when it was CREATED, its current STATUS, which PORTS it uses, and any NAMES assigned to it.

- To complete the Service Node deployment, we'll need to know either the CONTAINER ID or container NAME of the Service Node container. For that, we can filter the above docker ps output through grep like this:

docker ps | grep snode

That command should return information about the snode container, something like this:

    f9b910221ca2   blocknetdx/servicenode:latest   "/opt/blockchain/sta…"   26 hours ago   Up 26 hours   41412/tcp, 41414/tcp, 41419/tcp, 41474/tcp   exrproxy-env-snode-1

The first item returned in this example (f9b910221ca2) is the CONTAINER ID, and the last item returned (exrproxy-env-snode-1) is the NAME of the container. Either of these two values can be used to access the snode container.

-

It will take 3.5+ hours for the Blocknet blockchain to sync in your snode container. Periodically monitor the current block height of the Blocknet wallet running in the snode container by issuing the following command:

docker exec exrproxy-env-snode-1 blocknet-cli getblockcount

Note, here we are executing the command blocknet-cli within the exrproxy-env-snode-1 container, which is the name we found for the snode container in the previous step. Also note, initial calls to getblockcount may return errors until headers finish syncing. This is normal and nothing to be concerned about.

Tips for monitoring block height during syncing, and generally accessing blocknet-cli more easily.

To make access to the blocknet-cli program more convenient, you may want to create an alias something like the following:

alias snode-cli='docker exec exrproxy-env-snode-1 blocknet-cli'

If you add that alias to ~/.bash_aliases (or any file sourced on login), it will be defined automatically every time you log in to your Linux system. Another idea is to create a small Bash shell script something like this:

    #!/bin/bash
    # Pass every argument through to blocknet-cli inside the snode container.
    docker exec exrproxy-env-snode-1 blocknet-cli "$@"

If you create such a shell script, give it a name like snode-cli, give it executable permissions (chmod +x snode-cli), then move it to some directory in your $PATH. Then you can use the Linux watch utility like this:

watch snode-cli getblockcount

(Enter echo $PATH to see which directories are in your $PATH. Enter man watch to learn about the watch utility and its options.)

-

When the block height in the snode container matches that of the Blocknet blockchain explorer, your Service Node wallet is fully synced and you can now activate your Service Node as follows:

- On your Collateral Wallet, issue the command servicenoderegister. If your Collateral Wallet was set up according to the VPS Staking guide, and the alias for blocknet-cli was also created according to that guide, you can issue the servicenoderegister command as follows:

blocknet-cli servicenoderegister

Otherwise, if your Collateral Wallet is a GUI/Qt wallet running on a different computer, simply enter servicenoderegister in Tools->Debug Console of your Collateral Wallet.

- On your Service Node Wallet, issue the servicenodesendping command like this:

docker exec exrproxy-env-snode-1 blocknet-cli servicenodesendping

(Note, if you created the snode-cli alias or shell script as suggested in the Tip above, you can simply enter snode-cli servicenodesendping.)
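The checks around activation can be scripted: waiting until the container's block height matches the explorer, then confirming that servicenodestatus reports "status": "running". The helpers below are hypothetical. They assume servicenodestatus prints JSON containing that status field, as described in this guide. They also assume you read the explorer height yourself, since no explorer API is assumed here.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the activation checks.

# snode_running: reads servicenodestatus JSON on stdin and succeeds if it
# contains "status": "running".
snode_running() {
  grep -q '"status": *"running"'
}

# heights_match LOCAL EXPLORER: succeeds when both are integers and the
# container's block count has reached the explorer's height.
heights_match() {
  [[ $1 =~ ^[0-9]+$ && $2 =~ ^[0-9]+$ ]] && ((10#$1 >= 10#$2))
}
```

For example, to poll once a minute until the node reports running:

until docker exec exrproxy-env-snode-1 blocknet-cli servicenodestatus | snode_running; do sleep 60; done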

- On your Service Node Wallet, check to confirm your Service Node is running and supporting all the right coins/services, like this:

docker exec exrproxy-env-snode-1 blocknet-cli servicenodestatus

This command should return "status": "running", and also the correct/expected list of supported services.

- You can also verify your Service Node is visible on the network by issuing the following command on your Collateral Wallet:

blocknet-cli servicenodestatus

(If your Collateral Wallet is a GUI/Qt wallet, simply enter servicenodestatus in Tools->Debug Console of your Collateral Wallet.)

-

(Recommended) Install fail2ban to protect your EXR SNode from malicious http attacks. The following steps are for setting up fail2ban v0.11.1-1 on Ubuntu 20.04.3 LTS. The steps for setting up other versions of fail2ban should be very similar, but they may not be exactly the same.

Install fail2ban

- Get the xr_proxy-log-path:

docker inspect exrproxy-env-xr_proxy-1 | grep '"LogPath":'

This will return something like the following:

    "LogPath": "/var/lib/docker/containers/6554ded0f7dd2abc0f415a511ff0099c5233fca6e17f2b409e9f40be4d43d9cf/6554ded0f7dd2abc0f415a511ff0099c5233fca6e17f2b409e9f40be4d43d9cf-json.log",

The /var/lib/docker/containers/<big-hex-number>-json.log part of what's returned is the xr_proxy-log-path we'll need in future steps, so copy it someplace for future use.

- Find your LAN address range.

Your LAN address range can be found by issuing this command:

ip addr

The ip addr command will return something like this:

    1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: wlp58s0: mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 9c:b6:d0:d0:fc:b5 brd ff:ff:ff:ff:ff:ff
        inet 192.168.1.20/24 brd 192.168.1.255 scope global dynamic noprefixroute wlp58s0
           valid_lft 5962sec preferred_lft 5962sec
        inet6 fe80::bf14:21e3:4223:e5e4/64 scope link noprefixroute
           valid_lft forever preferred_lft forever

In the above output, you can ignore item 1: called lo (loopback). You can see that the IP address range of the LAN in this example is displayed in item 2:, just after the word inet: 192.168.1.20/24. The /24 at the end means the first 24 bits of the address identify the network (subnet mask 255.255.255.0), so 192.168.1.20/24 covers every address from 192.168.1.0 to 192.168.1.255, i.e. the full range of addresses used by the LAN in this example system. Copy whatever value represents the full range of the LAN for your system. We'll need it in a future step.

- Install fail2ban:

sudo apt install fail2ban

- Initialize setup:

sudo cp /etc/fail2ban/jail.conf /etc/fail2ban/jail.local

- Edit config file:

Use a simple text editor like vi or nano to edit /etc/fail2ban/jail.local. For example:

sudo nano /etc/fail2ban/jail.local

- Within /etc/fail2ban/jail.local, search for these 3 commented-out lines:

    #bantime.increment = true
    #bantime.factor = 1
    #bantime.formula = ban.Time * (1<<(ban.Count if ban.Count<20 else 20)) * banFactor

Note, these 3 lines will be in different parts of the file, not contiguous as they are displayed above.

- Uncomment those 3 lines by removing the # at the beginning of each line:

    bantime.increment = true
    bantime.factor = 1
    bantime.formula = ban.Time * (1<<(ban.Count if ban.Count<20 else 20)) * banFactor

- Search for the following line:

    #ignoreip = 127.0.0.1/8 ::1

- Uncomment this line by removing the # at the beginning of the line so it looks like this:

    ignoreip = 127.0.0.1/8 ::1

It will also be good to add the full address range of your LAN, as discovered above in step b, to the end of this line. This will whitelist all the IP addresses of your LAN so they can't get banned. For example, to whitelist all LAN addresses for the example system of step b above, you would simply add a space and then 192.168.1.20/24 to the end of the ignoreip line, like this:

    ignoreip = 127.0.0.1/8 ::1 192.168.1.20/24

(Be sure to replace 192.168.1.20/24 here with whatever LAN address range you discovered for your system in step b above.)

- Search for the following line:

    banaction = iptables-multiport

Change it to:

    banaction = iptables-allports

- Search for these 3 lines:

    #
    # JAILS
    #

Just below those 3 lines, add the following lines, replacing xr_proxy-log-path with the xr_proxy-log-path you found in step a above:

    [nginx-x00]
    enabled = true
    port = http,https
    filter = nginx-x00
    logpath = xr_proxy-log-path
    maxretry = 1
    findtime = 3600
    bantime = 24h

    [nginx-404]
    enabled = true
    port = http,https
    filter = nginx-404
    logpath = xr_proxy-log-path
    maxretry = 1
    findtime = 3600
    bantime = 1h

After replacing xr_proxy-log-path with the value you found in step a above, the logpath = lines will look something like this:

    logpath = /var/lib/docker/containers/<big-hex-number>-json.log

- Save your edits to /etc/fail2ban/jail.local and exit the editor.
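Steps a and b, and the simple line edits to jail.local, can also be done non-interactively. The helpers below are a hypothetical sketch. They assume the jail.conf lines look exactly as quoted above, and they do not add the [nginx-x00]/[nginx-404] jail sections, which you still add by hand. On a Docker host, ip addr also lists bridges such as docker0, so pick the range that belongs to your LAN interface.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for steps a, b and the jail.local line edits.

# log_path_from_inspect: reads `docker inspect` output on stdin and prints
# the LogPath value (the xr_proxy-log-path).
log_path_from_inspect() {
  sed -n 's/.*"LogPath": *"\([^"]*\)".*/\1/p'
}

# lan_ranges: reads `ip addr` output on stdin and prints every IPv4
# address/prefix except loopback (127.x.x.x).
lan_ranges() {
  awk '$1 == "inet" && $2 !~ /^127\./ { print $2 }'
}

# patch_jail_local LAN_RANGE: reads jail.conf text on stdin and prints it with
# the bantime.* lines and ignoreip uncommented, LAN_RANGE appended to ignoreip,
# and banaction switched to iptables-allports.
patch_jail_local() {
  local lan=$1
  sed -E \
    -e 's/^#(bantime\.(increment|factor|formula)[[:space:]]*=)/\1/' \
    -e "s|^#ignoreip = 127\.0\.0\.1/8 ::1\$|ignoreip = 127.0.0.1/8 ::1 ${lan}|" \
    -e 's/^banaction = iptables-multiport$/banaction = iptables-allports/'
}
```

For example, docker inspect exrproxy-env-xr_proxy-1 | log_path_from_inspect prints the log path (docker inspect --format '{{.LogPath}}' exrproxy-env-xr_proxy-1 gives the same value directly), and ip addr | lan_ranges lists candidate LAN ranges. Then:

patch_jail_local 192.168.1.20/24 < /etc/fail2ban/jail.conf | sudo tee /etc/fail2ban/jail.local > /dev/null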


- Add Filters:

- Use a simple text editor like vi or nano to create the file /etc/fail2ban/filter.d/nginx-x00.conf. For example:

sudo nano /etc/fail2ban/filter.d/nginx-x00.conf

Add the following lines to the file:

    [Definition]
    failregex = ^{"log":"<HOST> .* .*\\x
    ignoreregex =

Then save the file and exit the editor.

- Use a simple text editor like vi or nano to create the file /etc/fail2ban/filter.d/nginx-404.conf. For example:

sudo nano /etc/fail2ban/filter.d/nginx-404.conf

Add the following lines to the file:

    [Definition]
    failregex = {"log":"<HOST>.*(GET|POST|HEAD).*( 404 )
    ignoreregex =

Then save the file and exit the editor.
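The two filter files can also be written from a script, which avoids mistyping the backslashes in the regular expressions. write_nginx_filters is a hypothetical helper. It writes into whatever directory you pass, so you can generate the files in a scratch directory, review them, then copy them into place with sudo. Quoted heredocs keep the <HOST> placeholders and backslashes exactly as shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write nginx-x00.conf and nginx-404.conf into DIR.
write_nginx_filters() {
  local dir=${1:?usage: write_nginx_filters DIR}
  # 'EOF' is quoted, so the shell does not touch \\x, <HOST> or parentheses.
  cat > "$dir/nginx-x00.conf" <<'EOF'
[Definition]
failregex = ^{"log":"<HOST> .* .*\\x
ignoreregex =
EOF
  cat > "$dir/nginx-404.conf" <<'EOF'
[Definition]
failregex = {"log":"<HOST>.*(GET|POST|HEAD).*( 404 )
ignoreregex =
EOF
}
```

For example:

tmp=$(mktemp -d); write_nginx_filters "$tmp"; sudo cp "$tmp"/nginx-*.conf /etc/fail2ban/filter.d/

You can then test a filter against the real log with fail2ban-regex, passing the xr_proxy-log-path and the filter file, e.g. fail2ban-regex xr_proxy-log-path /etc/fail2ban/filter.d/nginx-404.conf.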


- Restart fail2ban service:

sudo service fail2ban restart

- Verify fail2ban is running properly:

sudo service fail2ban status

- Check the logs of fail2ban (press Ctrl-C to exit the scrolling logs):

sudo tail -f /var/log/fail2ban.log

- Fix iptables. To complete this last step, you must wait for at least one IP address to get banned in each of the two jails we created above (nginx-x00 and nginx-404). This may take a few hours, so you may want to just let fail2ban run overnight before coming back to this step. Then check the fail2ban logs to confirm there has been at least one IP banned in each of the new jails:

sudo less /var/log/fail2ban.log

Search with less for nginx-404 and nginx-x00 to confirm at least one IP ban in each jail. Once this has been confirmed, you can fix the system IP tables so the fail2ban logs will no longer print "Already Banned" messages. Fix the IP tables by issuing these two commands:

sudo iptables -I FORWARD -j f2b-nginx-x00

sudo iptables -I FORWARD -j f2b-nginx-404
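Instead of searching through less, you can count bans per jail. You can also insert the FORWARD rules only if they are missing, so running the step twice doesn't create duplicates. This is a hypothetical sketch. count_bans assumes fail2ban's usual log format (a line such as "NOTICE  [nginx-404] Ban 203.0.113.9"), and ensure_forward_jump relies on iptables -C, which checks whether an identical rule already exists.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the "Fix iptables" step.

# count_bans JAIL: reads fail2ban.log on stdin and prints how many
# "[JAIL] Ban <ip>" lines it contains ("already banned" lines are not counted).
count_bans() {
  grep -cE "\[$1\] Ban " || true   # grep -c prints 0 but exits 1 on no match
}

# ensure_forward_jump CHAIN: add "-j CHAIN" at the top of FORWARD only if an
# identical rule is not already present.
ensure_forward_jump() {
  sudo iptables -C FORWARD -j "$1" 2>/dev/null || sudo iptables -I FORWARD -j "$1"
}
```

For example:

sudo cat /var/log/fail2ban.log | count_bans nginx-404

ensure_forward_jump f2b-nginx-x00; ensure_forward_jump f2b-nginx-404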


-

To learn how to add or subtract supported services/coins from your Service Node, and generally navigate and manage the docker containers of your Service Node, continue on to Maintenance of Enterprise XRouter Environment.

Maintenance of Enterprise XRouter Environment¶


Changing Configuration of an Enterprise XRouter Environment

With the advent of the builder.py script, the procedure for changing the configuration of an EXR ENV is basically the same as the procedure for configuring and deploying an EXR ENV for the first time. That procedure is given in the first 21 steps of the Deploy Enterprise XRouter Environment guide.

Note, however, that if you've removed one or more services/coins from your EXR ENV's supported services because you want to recover the disk space they occupy, the blockchain data is not deleted when builder.py removes the docker container of a service. The blockchain data of a service/coin persists in the data mount directory you chose for the service when configuring your SNode. If you kept the default data mount directory, which is /snode, then the blockchain data for DGB, for example, will be stored in /snode/DGB, and it can occupy many GB of space. To find out exactly how much space is being used by each service/coin, you can enter:

sudo du -d 1 -h /snode

which will return something like this:

57G     /snode/LTC
3.6G    /snode/MONA
222M    /snode/testsnode
3.3G    /snode/snode
27G     /snode/DGB
16K     /snode/xr_proxy
11G     /snode/RVN
48M     /snode/eth_pymt_db

sudo is necessary here because the /snode directory is owned by root, not by $USER.

So, if you want to stop supporting DGB, for example, and you also want to free up the 27 GB of space the DGB blockchain is occupying, you'll need to first use ./builder.py to reconfigure and relaunch your SNode without DGB support, then issue the following command to permanently remove the DGB blockchain data and free the space it is occupying:

sudo rm -rf /snode/DGB

Warning: If you've been running a Trading Node on your EXR ENV server, be sure to save any wallet files which may be located in the data mount directory before issuing the rm -rf command to remove the data mount directory.

Warning: Be very careful to enter the rm -rf command precisely. A typo could be disastrous. Also, be aware that adding support for DGB again after deleting its blockchain data will require the DGB blockchain to sync from scratch.
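Because a typo in the rm -rf step above can be disastrous, it may help to wrap it in a small guard. The following is a minimal sketch of a hypothetical helper, remove_coin_data (it is not part of builder.py), assuming the default /snode data mount root; the SNODE_DIR and SUDO variables are also assumptions of this sketch. It refuses empty names and anything containing a slash, checks that the directory exists, prints its size, and only then deletes it. It does not back up wallet files, so the Trading Node warning above still applies.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of builder.py): delete the blockchain data of
# ONE service/coin, with guards against the most dangerous typos.
# Assumes the default data mount root /snode; override it with SNODE_DIR.
# Set SUDO="" to run without sudo (e.g. if you own the directory).
remove_coin_data() {
    local coin="$1"
    local root="${SNODE_DIR:-/snode}"
    local sudo_cmd="${SUDO-sudo}"

    # Refuse an empty name, "." / "..", or anything containing a slash,
    # so the target can only ever be a direct child of the data root.
    case "$coin" in
        "" | . | .. | */*)
            echo "refusing: invalid coin name '$coin'" >&2
            return 1
            ;;
    esac

    # Refuse if the directory does not exist (or cannot be seen).
    local target="$root/$coin"
    if [ ! -d "$target" ]; then
        echo "refusing: $target is not a directory" >&2
        return 1
    fi

    # Report how much space will be freed, then delete the directory.
    $sudo_cmd du -sh "$target"
    $sudo_cmd rm -rf -- "$target"
}
```

For example, after relaunching your SNode without DGB via ./builder.py, you would run remove_coin_data DGB. Restricting the argument to a bare directory name means a slip such as a stray space or slash is rejected instead of being passed to rm -rf.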

About docker

Tip: If the blockchain of one of the services in your EXR ENV ends up on a fork, or its database becomes corrupted, often the easiest way to get it back on track is to stop the docker container running the service, remove the data mount directory of the service, then start the service again.

You can use ./builder.py to stop the docker container running the service, or you can stop the container manually. For example, to manually stop the DGB container, delete its blockchain data and start it again, you would issue these commands:

docker stop exrproxy-env-DGB-1

sudo rm -rf /snode/DGB

docker start exrproxy-env-DGB-1

Warning: If you've been running a Trading Node on your EXR ENV server, be sure to save any wallet files which may be located in the data mount directory before issuing the rm -rf command to remove the data mount directory.
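The stop, delete and start sequence above can also be collected into one function so the container name and data directory cannot drift apart. This is a minimal sketch of a hypothetical reset_service helper, assuming the exrproxy-env-<COIN>-1 container naming used in this guide and the default /snode data mount root (the SNODE_DIR and SUDO variables are assumptions of this sketch). It aborts if the container fails to stop, so data is never deleted out from under a running daemon.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make one service resync from scratch by stopping its
# container, deleting its blockchain data, then starting the container again.
# Assumes the exrproxy-env-<COIN>-1 container naming used in this guide and
# the default data mount root /snode (override with SNODE_DIR).
# Back up any wallet files in the data directory BEFORE running this.
reset_service() {
    local coin="$1"
    local root="${SNODE_DIR:-/snode}"
    local sudo_cmd="${SUDO-sudo}"
    local container="exrproxy-env-${coin}-1"

    # Only accept a plain directory name such as DGB.
    case "$coin" in
        "" | . | .. | */*)
            echo "invalid coin name: '$coin'" >&2
            return 1
            ;;
    esac

    # Stop first so the daemon is not writing while its data is deleted;
    # abort if the stop fails rather than deleting live data.
    docker stop "$container" || return 1
    $sudo_cmd rm -rf -- "$root/$coin" || return 1
    docker start "$container"
}
```

With this defined, reset_service DGB performs the same three steps as the manual commands above.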

For the most part, the docker commands given thus far in this guide will suffice to manage your EXR ENV. However, if something "out of the ordinary" happens, or if you want to do something fancy with your docker objects, there are a few more things it will be good to know about docker:

- Let's say, for example, you want to interact directly with the DGB daemon, but you aren't sure of the name of the DGB CLI executable. Here's one way to find it:

  - Start an interactive Bash shell in the DGB container:

      docker exec -it exrproxy-env-DGB-1 /bin/bash

    You should see a prompt like this:

      root@f4c21d83d6fe:/opt/blockchain#

  - Find which daemons are running in the DGB container:

      root@f4c21d83d6fe:/opt/blockchain# ps uax

    It should show you something like this:

      USER  PID %CPU %MEM     VSZ     RSS TTY   STAT START  TIME COMMAND
      root    1 46.4 28.7 8132896 4726660 ?     SLsl 11:37 25:38 digibyted -conf=/opt/blockchain/config/digibyte.conf
      root   29  1.7  0.0   18248    3280 pts/0 Ss   12:33  0:00 /bin/bash
      root   39  0.0  0.0   34424    2788 pts/0 R+   12:33  0:00 ps uax

  - Note there is one running daemon process, called digibyted. This is the name of the DigiByte daemon process, and it is also a great clue about the name of the CLI command for interacting with the DigiByte daemon. The name of the CLI command can usually be derived from the name of the daemon process by simply replacing the d at the end of the daemon process name with -cli, like this:

      digibyted -> digibyte-cli

  - If you want to verify there is a digibyte-cli command, you can do this:

      which digibyte-cli

    which should return this:

      /usr/bin/digibyte-cli

  - Now you can interact with the DigiByte daemon as follows:

      root@f4c21d83d6fe:/opt/blockchain# digibyte-cli getblockcount
      13535747

    Alternatively, you can exit the Bash shell running in the DGB container, then execute the digibyte-cli command from outside the docker container:

      root@f4c21d83d6fe:/opt/blockchain# exit
      exit
      ~$ docker exec exrproxy-env-DGB-1 digibyte-cli getblockcount
      13535762


- Now let's consider the case where the docker container you want to interact with does not have a Bash shell available. This is [edit: was] the case for the Syscoin container, for example. Attempting to invoke the Bash shell in the Syscoin container will result in an error:

    docker exec -it exrproxy-env-SYS-1 /bin/bash
    OCI runtime exec failed: exec failed: container_linux.go:380: starting container process caused: exec: "/bin/bash": stat /bin/bash: no such file or directory: unknown

  The solution is to invoke a shell which does exist in the container, like this:

    docker exec -it exrproxy-env-SYS-1 /bin/sh

- Another unique feature of [an earlier version of] the Syscoin container is that the syscoin-cli command requires certain parameters to be passed to it in order to work properly. For example,

    docker exec exrproxy-env-SYS-1 syscoin-cli getblockcount

  returns

    error: Could not locate RPC credentials. No authentication cookie could be found, and RPC password is not set. See -rpcpassword and -stdinrpcpass. Configuration file: (/root/.syscoin/syscoin.conf)

  But invoking syscoin-cli as follows works properly:

    docker exec exrproxy-env-SYS-1 syscoin-cli -conf=/opt/blockchain/config/syscoin.conf getblockcount
    1218725

  Alternatively, this also works:

    docker exec exrproxy-env-SYS-1 syscoin-cli -datadir=/opt/blockchain/config getblockcount
    1218725

  Note: The DASH container, and perhaps other containers, will also require these same parameters to be passed to the CLI command. [edit: The latest SYS container does not require the additional parameter.]

- Now let's consider another case where more docker knowledge could be required: you try to manually stop and remove all the docker containers of your SNode using docker-compose down in the ~/exrproxy-env directory, but docker-compose down fails to complete because it receives a timeout error something like the following:

    UnixHTTPConnectionPool(host='localhost', port=None): Read timed out.

  Some users have actually seen this exact error when trying to run docker-compose down while an I/O-intensive process (e.g. lbrycrdd) is running on the system. Here are some potentially helpful suggestions on how to fix/avoid this timeout error.

  However, once docker-compose down has failed to complete even once, it can leave your docker environment in a state where some docker containers have been stopped but not yet removed. This state is problematic because your next attempt to bring up your EXR ENV with deploy.sh will give errors saying the container names you're trying to create already exist. If you suspect such a situation has developed, one thing you can do is list all docker containers, both running and stopped:

    docker ps -a

  If this command shows some containers with an Exited (i.e. stopped) STATUS, then you probably need to remove those stopped containers. Fortunately, docker has a handy prune utility for removing all stopped containers on the server (not only those belonging to your EXR ENV):

    docker container prune

  Also, if your docker containers, images, volumes and/or networks get messed up for any reason, or if you end up with docker images on your server which are no longer used and are taking up space unnecessarily, docker offers a variety of prune utilities (docker image prune, docker volume prune, docker network prune and docker system prune) to clean up the current state of your docker environment.

- Note: In the context of an EXR ENV, executing the following 2 commands should have much the same effect as docker-compose down (which additionally removes the networks it created):

    docker stop $(docker ps -q -f name=exrproxy-env_*)
    docker rm $(docker ps -a -q -f name=exrproxy-env_*)

  This trick can be useful, for example, if docker-compose.yml is accidentally reconfigured before docker-compose down is executed, causing docker-compose down to fail.

- docker offers a variety of useful utilities for managing and interacting with various docker objects. To see a list of all docker options and commands, enter:

    docker --help

  To get help on a specific docker command, enter:

    docker COMMAND --help

  For example:

    docker export --help

  There are also docker guides available at https://docs.docker.com/go/guides/.
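The digibyted -> digibyte-cli naming rule and the -conf workaround described above for the Syscoin container can be combined into two small shell functions. This is a hedged sketch, not an official tool: cli_from_daemon applies the guide's rule of thumb (which will not hold for every coin), and the hypothetical coin_cli wrapper assumes the exrproxy-env-<COIN>-1 container name and a config file at /opt/blockchain/config/<chain>.conf, following the digibyte.conf and syscoin.conf paths shown above.

```shell
#!/usr/bin/env bash
# Rule of thumb from this guide: the CLI name is usually the daemon name
# with its trailing "d" replaced by "-cli" (digibyted -> digibyte-cli).
# It is only a heuristic; confirm with `which` inside the container.
cli_from_daemon() {
    local daemon="$1"
    case "$daemon" in
        ?*d) printf '%s-cli\n' "${daemon%d}" ;;
        *)
            echo "cannot derive a CLI name from '$daemon'" >&2
            return 1
            ;;
    esac
}

# Hypothetical wrapper: run a coin's CLI inside its container, always passing
# -conf so the CLI can find the RPC credentials (the workaround shown for
# older SYS containers). Assumes the exrproxy-env-<COIN>-1 container name
# and a config file at /opt/blockchain/config/<chain>.conf.
coin_cli() {
    if [ "$#" -lt 2 ]; then
        echo "usage: coin_cli COIN DAEMON [CLI-ARGS...]" >&2
        return 2
    fi
    local coin="$1" daemon="$2"
    shift 2

    local cli
    cli="$(cli_from_daemon "$daemon")" || return 1
    docker exec "exrproxy-env-${coin}-1" "$cli" \
        "-conf=/opt/blockchain/config/${cli%-cli}.conf" "$@"
}
```

For example, coin_cli SYS syscoind getblockcount runs docker exec exrproxy-env-SYS-1 syscoin-cli -conf=/opt/blockchain/config/syscoin.conf getblockcount. Passing -conf explicitly should be harmless for containers whose CLI already finds its credentials, since it points at the same file the daemon was started with.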
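As noted above, docker container prune removes every stopped container on the server. If other software also runs on the same host, a narrower cleanup after a failed docker-compose down may be preferable. The following is a minimal sketch of a hypothetical helper that uses docker ps filters to list only Exited containers whose names contain exrproxy-env and then removes just those. Containers left in other states (for example Created) are not matched by this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: after a failed `docker-compose down`, remove only the
# stopped (Exited) containers whose names contain "exrproxy-env", leaving
# any unrelated stopped containers on the host alone.
remove_stopped_exr_containers() {
    local names
    names="$(docker ps -a \
        --filter "name=exrproxy-env" \
        --filter "status=exited" \
        --format '{{.Names}}')" || return 1

    if [ -z "$names" ]; then
        echo "no stopped exrproxy-env containers found"
        return 0
    fi

    # Word splitting is intentional here: one container name per argument
    # (docker container names cannot contain spaces).
    printf 'removing: %s\n' $names
    docker rm $names
}
```

Running docker ps -a afterwards should show no Exited exrproxy-env containers, after which deploy.sh can recreate them.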